OpenAI combined a powerful AI model and a chatbot experience—and the rest is history. But ChatGPT may not be best for your workflow, especially when you want to automate your AI prompts with complete flexibility.

With the OpenAI API, you can automate what you’re doing manually, sending one or more requests and getting the responses right where you’re working, whether that’s a custom-coded solution or a no-code app builder. This works both for internal tools where you’re stitching tasks together with AI and for products you’re building for external users.

There are two ways to integrate the OpenAI API into your apps and internal tools. The easiest path is via Zapier: you can send data from over 8,000 work apps to ChatGPT and get the results wherever you need them.

But this guide shows you how to connect to the OpenAI API directly for complete control over requests and responses, including a step-by-step tutorial.


What is the OpenAI API?

The OpenAI API is an interface that offers connectors between OpenAI’s AI models and your apps. When you use the API, you can pass your instructions—often with deeper settings than a ChatGPT prompt—and get the response back on the platform you’re using to make the call. You can exchange messages, generate videos or images, and access audio generation, among other possibilities.

OpenAI API models

OpenAI offers a model collection that covers a wide range of workflows and use cases. Here are the main model series and what they’re best for:

- GPT-5.2, the best model for coding and agentic tasks. There are other versions available, such as GPT-5 or GPT-4.1.
- gpt-oss, an open-weight model that you can use via the API, download, tune, run locally, or self-host on your own hardware or cloud.
- GPT Image 1.5, the latest image generator model and successor to DALL-E 3.
- GPT-4o Transcribe, one of the most popular models for speech-to-text.
- Sora for AI video generation.
- Whisper, a general-purpose speech recognition model.

For a full list of all available models, refer to the documentation on OpenAI models.

What can you do with the OpenAI API?

| Category | Features |
|---|---|
| Generative and multimodal | Text + vision reasoning: Chat, analyze, extract, and summarize across text and images; Real-time voice + live multimodal: Run low-latency, speech-to-speech interactions and stream transcription in real time; Audio (TTS/STT): Convert speech to text without needing a full realtime session; Image generation + editing: Generate images and iteratively edit them over multiple turns; Video generation: Create videos from prompts (and iterate when supported) |
| Agents and actions | Tool / function calling: Let the model call your functions and systems to take actions; Built-in tools: Use integrated capabilities like web search, file search, computer use, code interpreter, and remote MCP connections; Structured outputs: Return schema-valid JSON suitable for automation and reliable parsing; Code interpreter containers: Run code in an isolated execution environment (implementation detail) |
| Data, retrieval, and customization | Files + uploads: Provide documents/media as inputs for analysis and generation; Knowledge bases / RAG: Ground answers on your data using vector stores and file search; Embeddings: Turn content into vectors for semantic search, clustering, and recommendations; Fine-tuning: Customize model behavior for your domain, tone, or tasks |
| Quality and safety | Evals + graders: Measure quality and run regression tests on model behavior; Moderation: Check text and images for policy/safety risks |
| Scale and cost control | Batch processing: Run large async workloads more cost-effectively |
| UI / experience builder | ChatKit: Ship a drop-in chat UI connected to agent workflows |

OpenAI API pricing

Here’s a snapshot of the pricing of OpenAI’s state-of-the-art models.

| Model | Type | Price |
|---|---|---|
| GPT-5.2 | Text, reasoning, agentic | Input: $1.75; Output: $14.00 (per million tokens, ~750k words) |
| GPT Image 1.5 | Image generation | High quality 1536×1024 image: $0.20 each |
| Sora 2 | Video generation | 720p video: $0.10 per second |

See individual pricing by selecting any model from the OpenAI models page, and full pricing information for all features on the pricing page.
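To get a feel for what these per-token prices mean in practice, here is a rough cost estimate in Python. It uses the GPT-5.2 prices from the table above; the token counts are a hypothetical workload, and `estimate_cost` is a helper name made up for this sketch.

```python
# Prices in US dollars per million tokens, copied from the table above.
# They may change, so check the pricing page before relying on them.
INPUT_PRICE_PER_MILLION = 1.75
OUTPUT_PRICE_PER_MILLION = 14.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in US dollars for one request."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload: 2,000 input tokens and 500 output tokens per request.
per_request = estimate_cost(2_000, 500)
print(f"One request: ${per_request:.4f}")
print(f"10,000 requests: ${per_request * 10_000:.2f}")
```

With these assumed numbers, a single request costs roughly one cent, and most of that comes from the output tokens, which are priced eight times higher than input tokens.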

Use Zapier to connect to OpenAI

Before diving into how API calls work, let’s look at a faster option if you need to integrate OpenAI models with your apps right away. Zapier is an automation platform that connects over 8,000 apps without code—including OpenAI. It handles the technical setup so you don’t have to manage calls and responses yourself.

Zapier acts as a universal API connector. Rather than digging through Notion API docs, figuring out how to pull HubSpot contacts, or formatting data for your data analysis tool, Zapier has already solved those problems. The platform maintains all its integrations and handles each service’s quirks, so you can focus on building rather than connecting.

And because it’s a no-code tool, non-technical teams can build their own automations and internal tools while engineers audit workflows, set permissions, and identify which automations deserve deeper integration—all while freeing up time for bigger problems.

Here are some pre-built workflows (called Zaps) that show what Zapier and OpenAI are capable of.

You can also use Zapier MCP to trigger these kinds of workflows while you’re chatting with ChatGPT, so you never even have to leave the chatbot. Learn more about how to automate ChatGPT with Zapier and how to use ChatGPT with Zapier MCP.


Tutorial: How to set up OpenAI API connections

Grab some snacks, set your phone to Do Not Disturb, and let’s start cooking.

Part 1: Preparation

Part 2: Calling the OpenAI API

Part 3: Building with the OpenAI API

Step 1: Before you begin

Before we jump in, here’s what you need to have and know to make it to the end quickly:

- The basics of what an API is. Read Zapier’s guide on how to use an API for a refresher.
- What JSON is: This is used to format API call bodies. If you’re lost, ChatGPT can help you spot errors or fix formatting.
- 15 to 30 minutes of focused time.
- A credit card to buy OpenAI API credits (minimum $5).
- Optional: a Postman account (free) for following along and making your first calls.

Step 2: Create an OpenAI account

(2.1) Head to the OpenAI platform and create or log in to your OpenAI account. If you’re already a ChatGPT user, you can use the same account credentials—but your paid subscription doesn’t apply to the API, so you’ll still have to buy credits.

Step 3: Create an OpenAI API key

The OpenAI API isn’t public: you need a key to identify yourself.

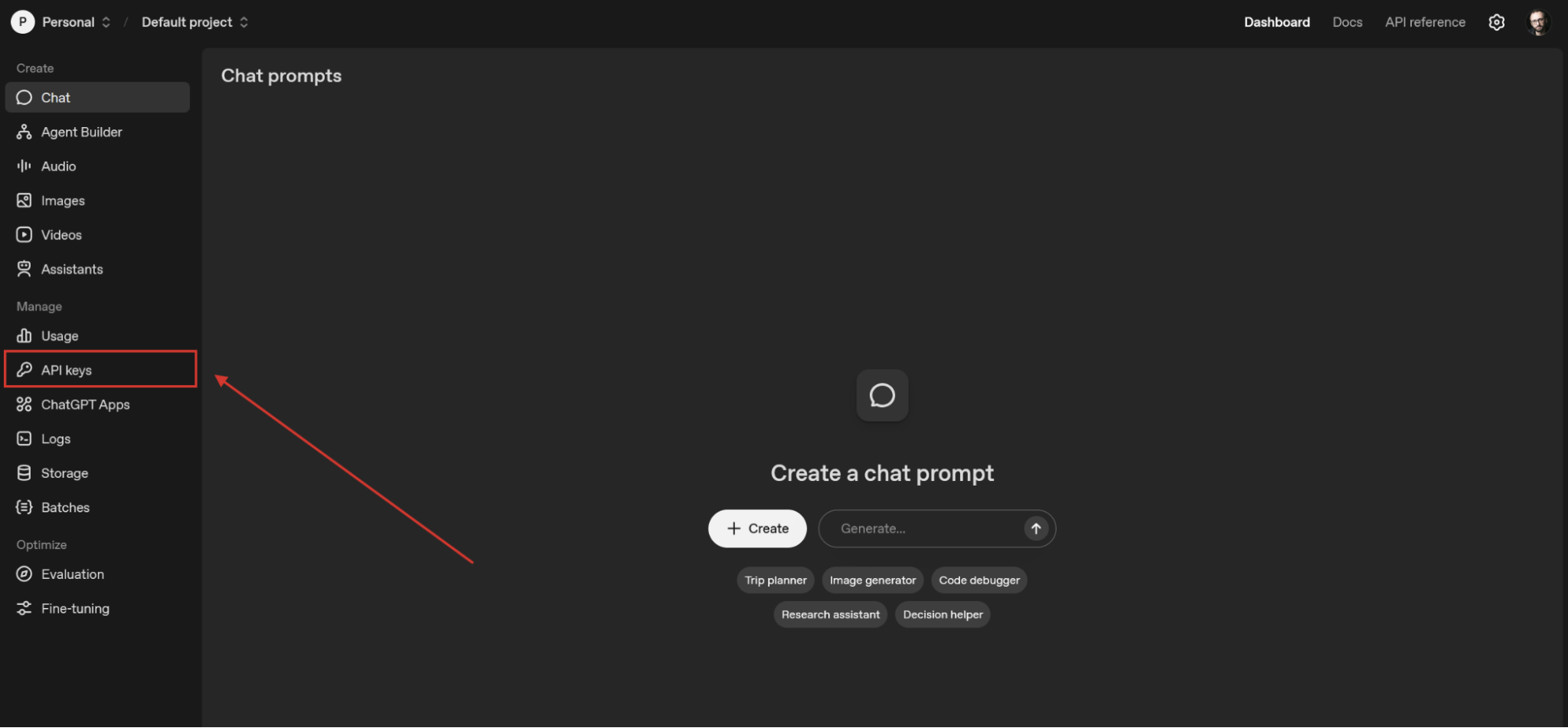

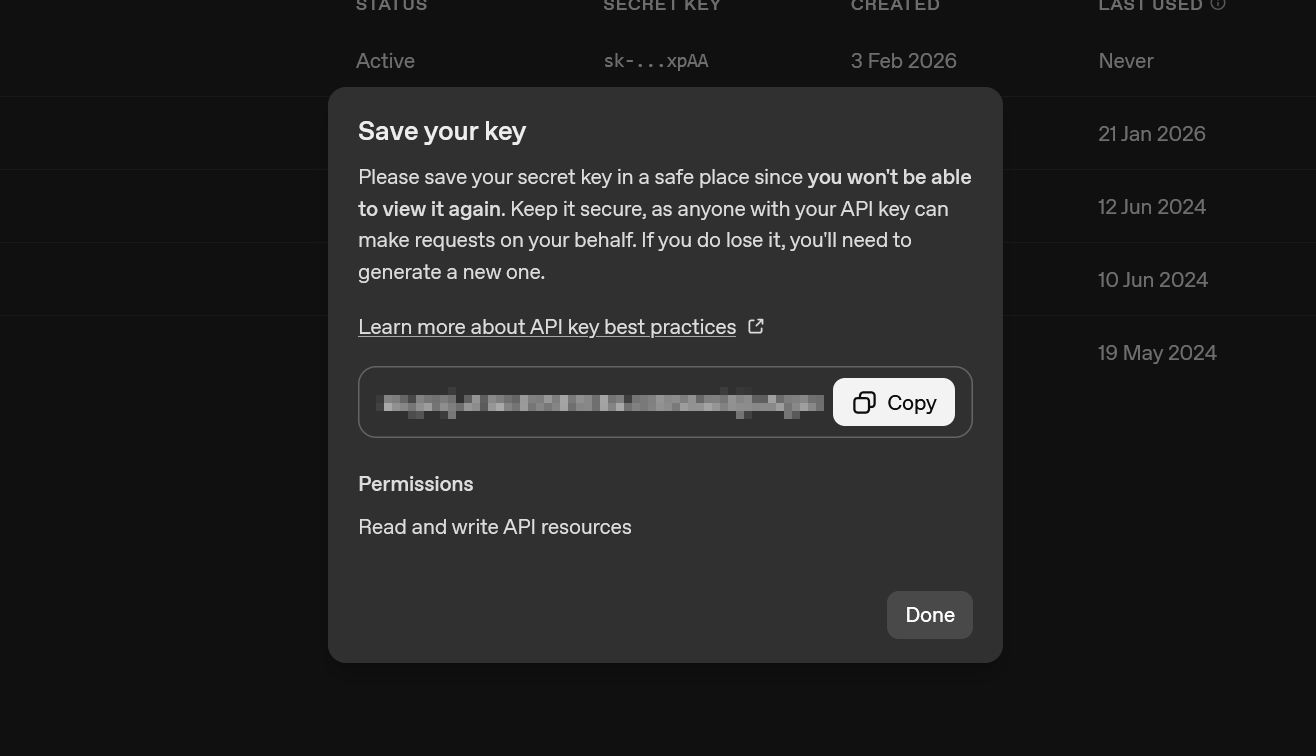

(3.1) On the left side menu, click API keys.

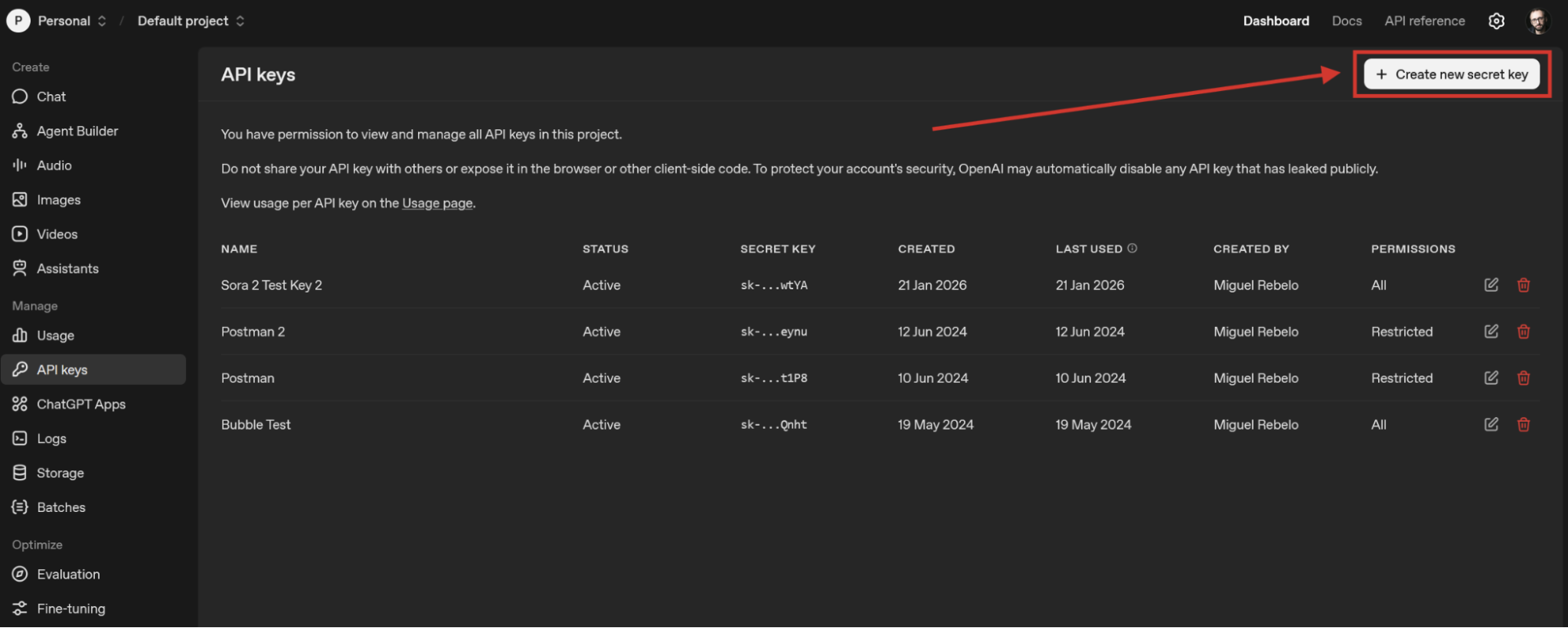

(3.2) Click Create new secret key.

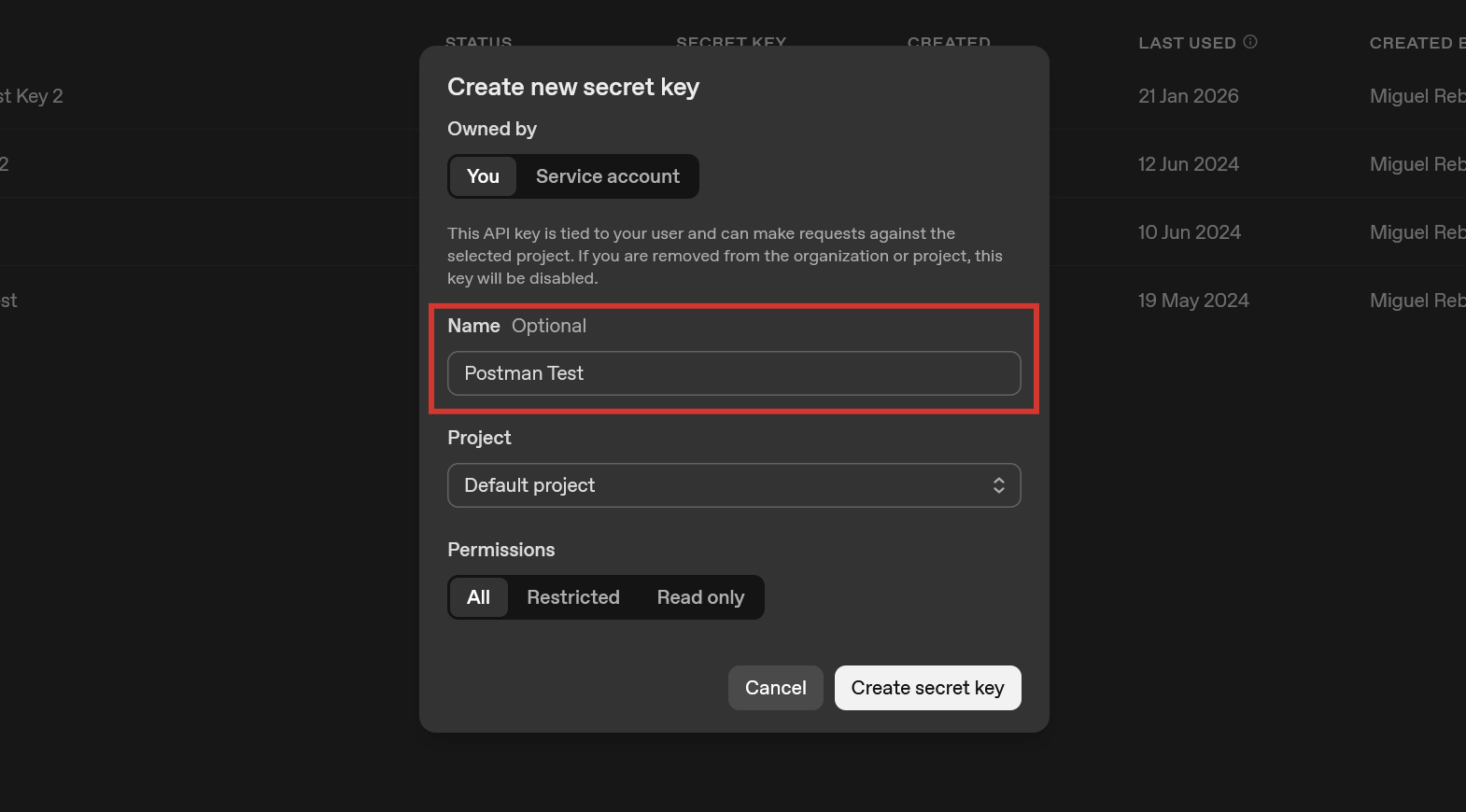

(3.3) Name your API key. For this tutorial, I’ll use “Postman Test” and leave all other settings as they are.

This pop-up includes three other settings:

- Key owner: Setting the owner to you will tie this key to your user account. Choose this if you’re developing locally, integrating it in quick scripts or tools, or running experiments. Service accounts are independent from users and are better suited when you’re integrating the key into a bot or AI-powered web service.
- Project: You can associate new keys with projects within the OpenAI platform. This organizes your keys and adds project-level tracking for usage and costs.
- Permissions: This determines which OpenAI platform features the key can access. When using the key in a real project, I recommend that you restrict the key to the features you actually need. For example, if you’re building a text-based chatbot, you can restrict image generation, videos, and all other endpoints that aren’t related to text.

(3.4) Copy the API key and save it somewhere safe—you won’t be able to see it again once you close the pop-up.

Very important: Regardless of the settings here, you need to keep this API key safe at all times. If someone finds your key, they could use it themselves, consuming credits, ruining training jobs you have going on, or even using it as an attack vector if you have OpenAI Assistants plugged into your systems. If this happens, you can discard the affected key and generate a new one. Don’t share this key with anyone who doesn’t need it, and if you’re publishing an app to the public web, be sure to read up on your API security best practices.

Once you click Done, the new key is added to the list. You can always delete or edit the name or permissions going forward.
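One common way to keep the key out of your code (and out of version control) is to read it from an environment variable. The sketch below assumes the variable is named `OPENAI_API_KEY`, the name used in OpenAI’s cURL examples; `load_api_key` and `mask_key` are hypothetical helper names for illustration.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read the API key from an environment variable instead of hard-coding it."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set. Export it in your shell or add it to your "
            "platform's secret storage before running this script."
        )
    return key


def mask_key(key: str) -> str:
    """Return a shortened version of the key that is safer to print in logs."""
    return f"{key[:3]}...{key[-4:]}" if len(key) > 8 else "***"
```

Calling `mask_key(load_api_key())` lets you confirm which key is loaded without printing the whole secret.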

Step 4: Add credits to your OpenAI account

You’ll need to pay for each generation job request you send to the OpenAI API—even if you’re a ChatGPT subscriber—so let’s add some credits to your account.

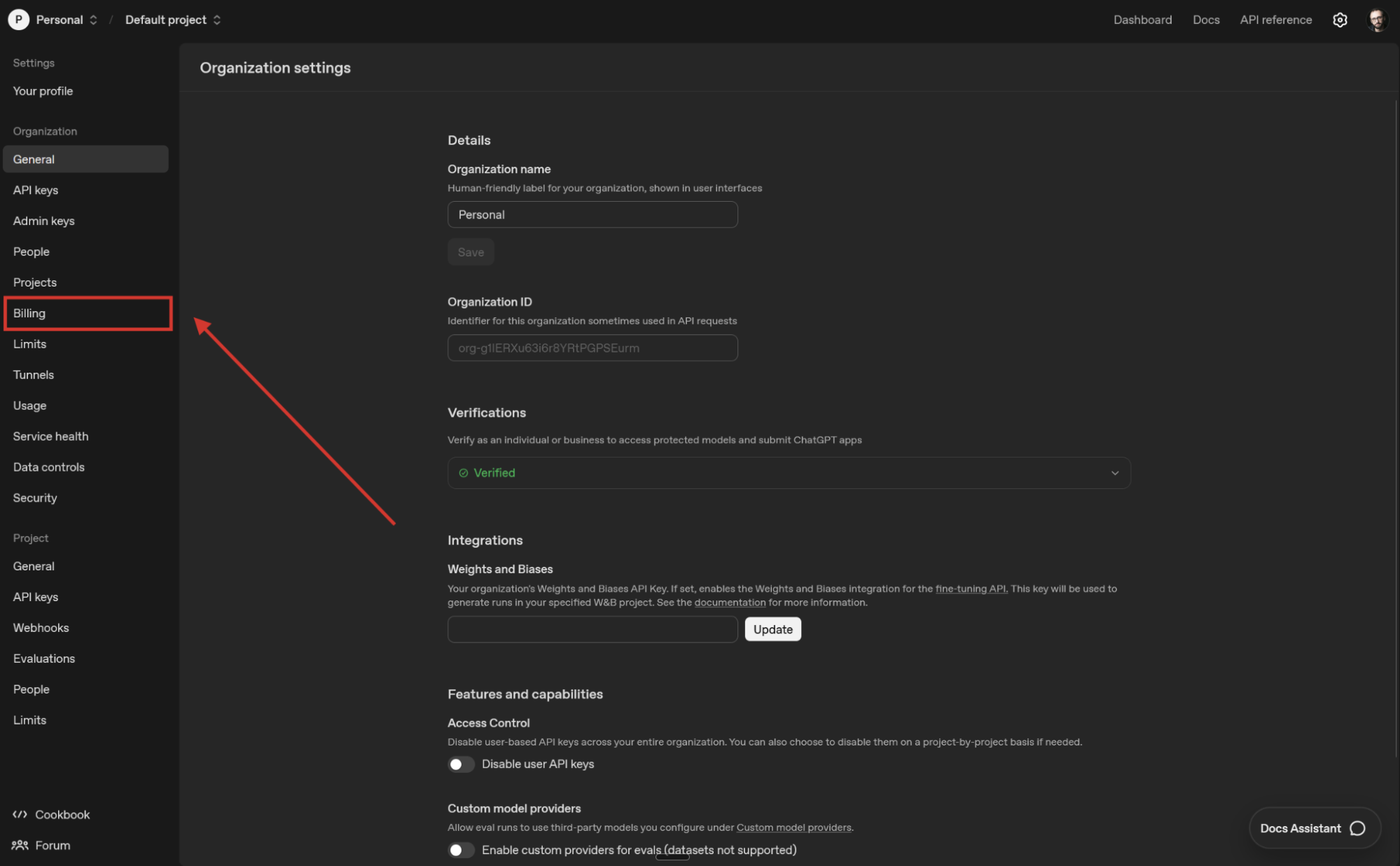

(4.1) Click the settings icon at the top right.

(4.2) Click Billing on the left.

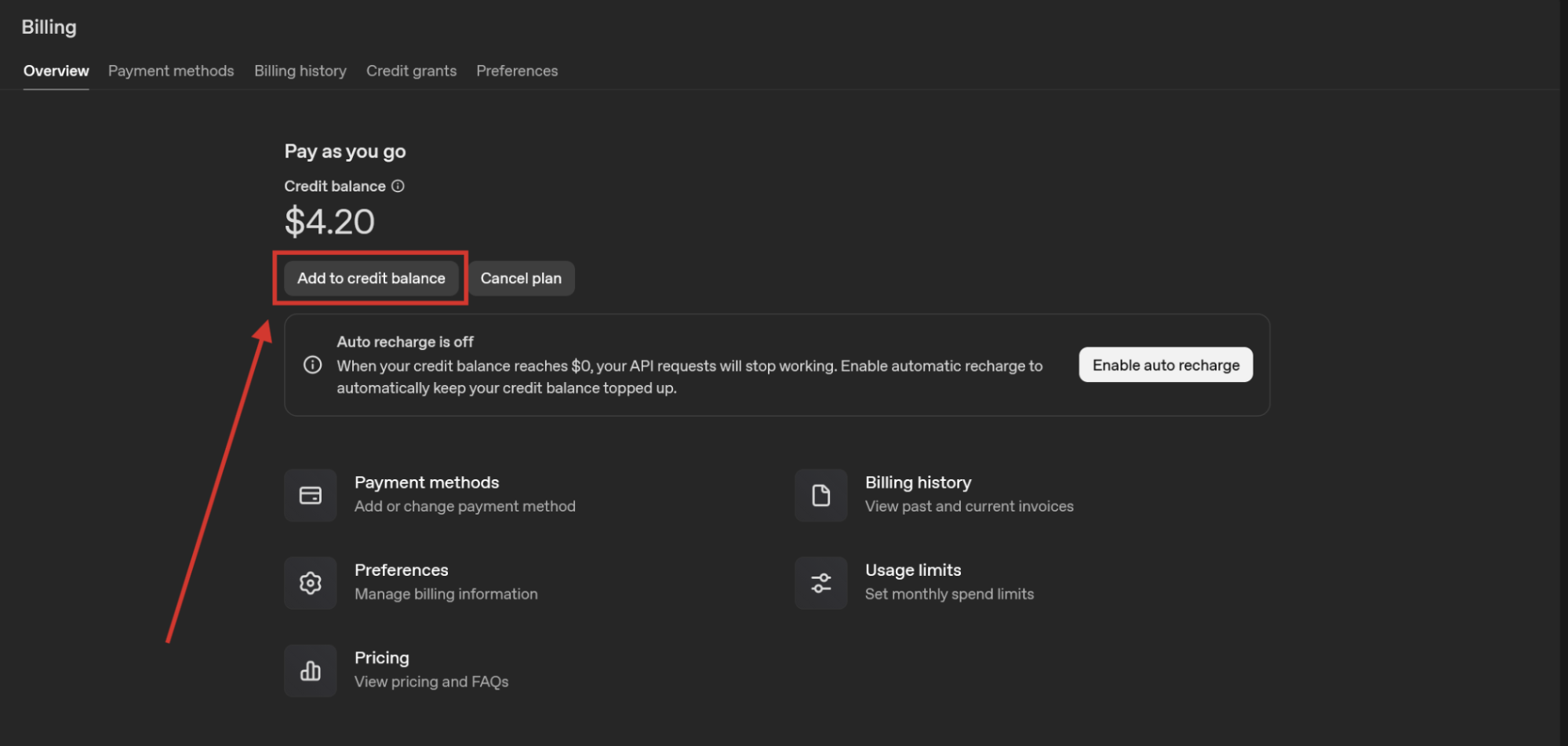

(4.3) Add credit balance to your account; this follows the same process as buying anything else online. You can add a minimum of $5 to get started. I already have some credits on my account, so your screen will look similar to mine below once you finish this step.

Step 5: Open the API documentation and reference pages

(5.1) Structuring and making API calls depends on OpenAI’s requirements for each request, which they share via two important resources:

- The documentation page gives you a general overview of the API’s features and guides on how to use them.
- The API reference page is a collection of technical instructions and specifications to help you structure your calls correctly.

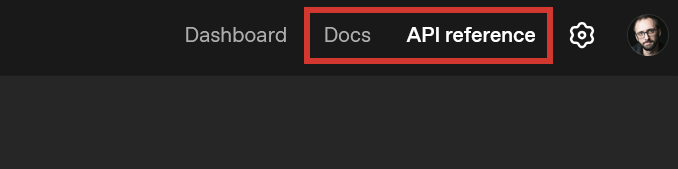

Always come back to these two resources whenever you’re troubleshooting an issue or brainstorming a new use case. You can quickly switch between both with the links at the top right of the screen.

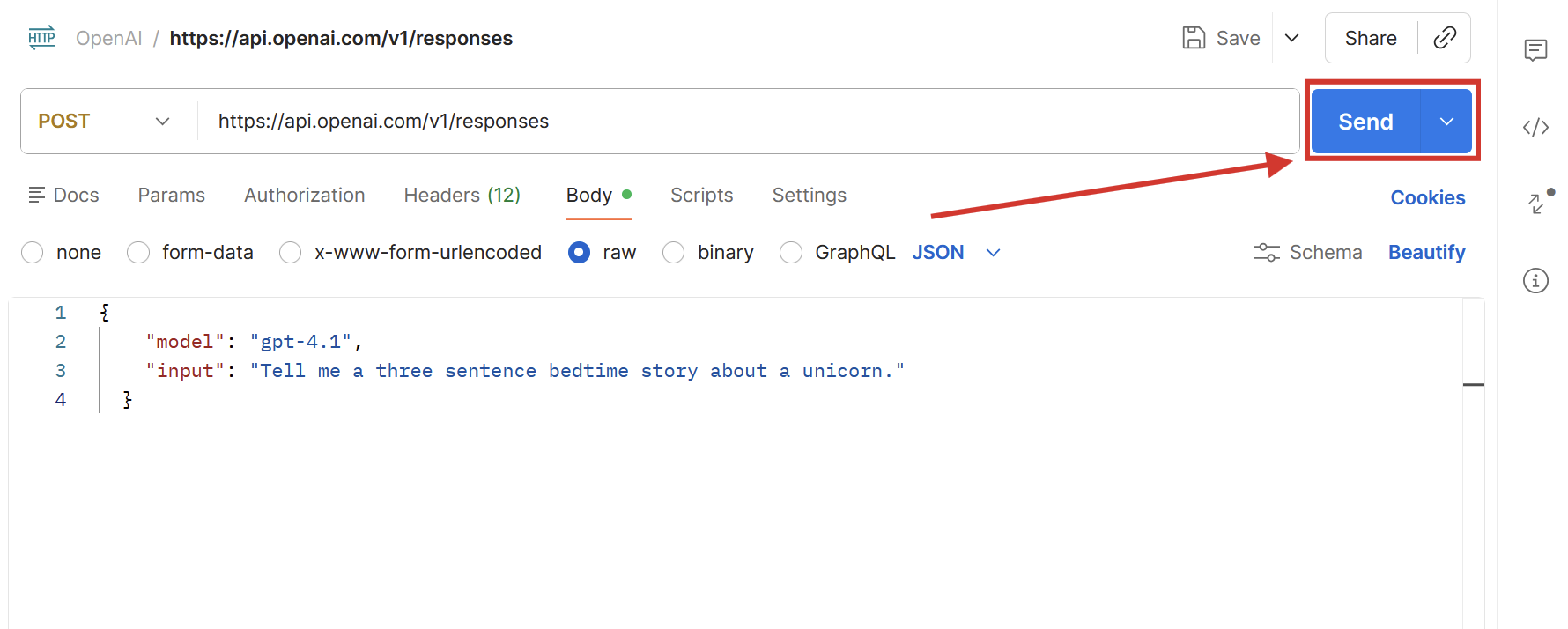

Step 6: Build a new request

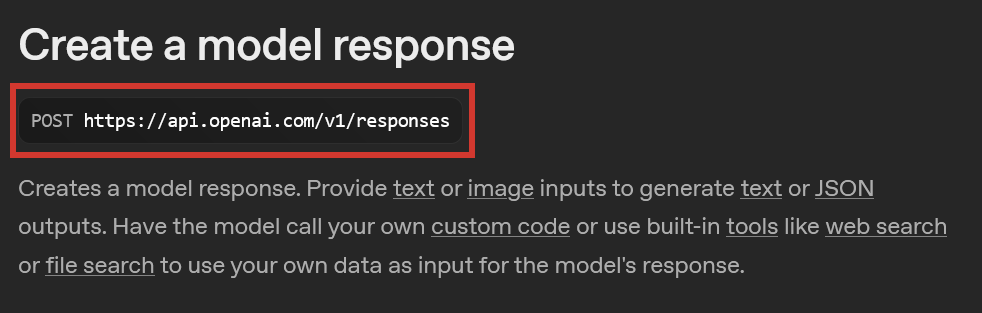

(6.1) For this tutorial, I’ll be using the Responses API to generate text. Open the Responses API reference page about this topic and keep it open on a browser tab if you’re following along.

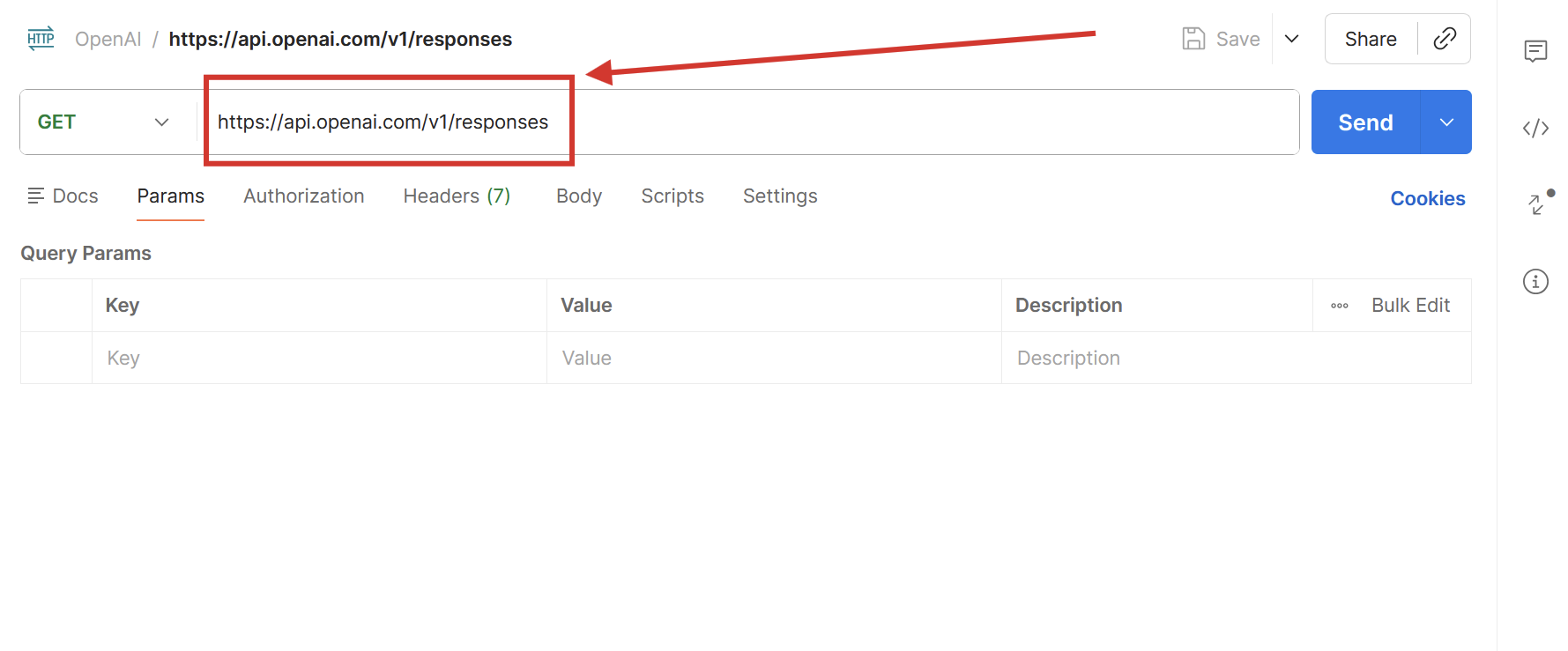

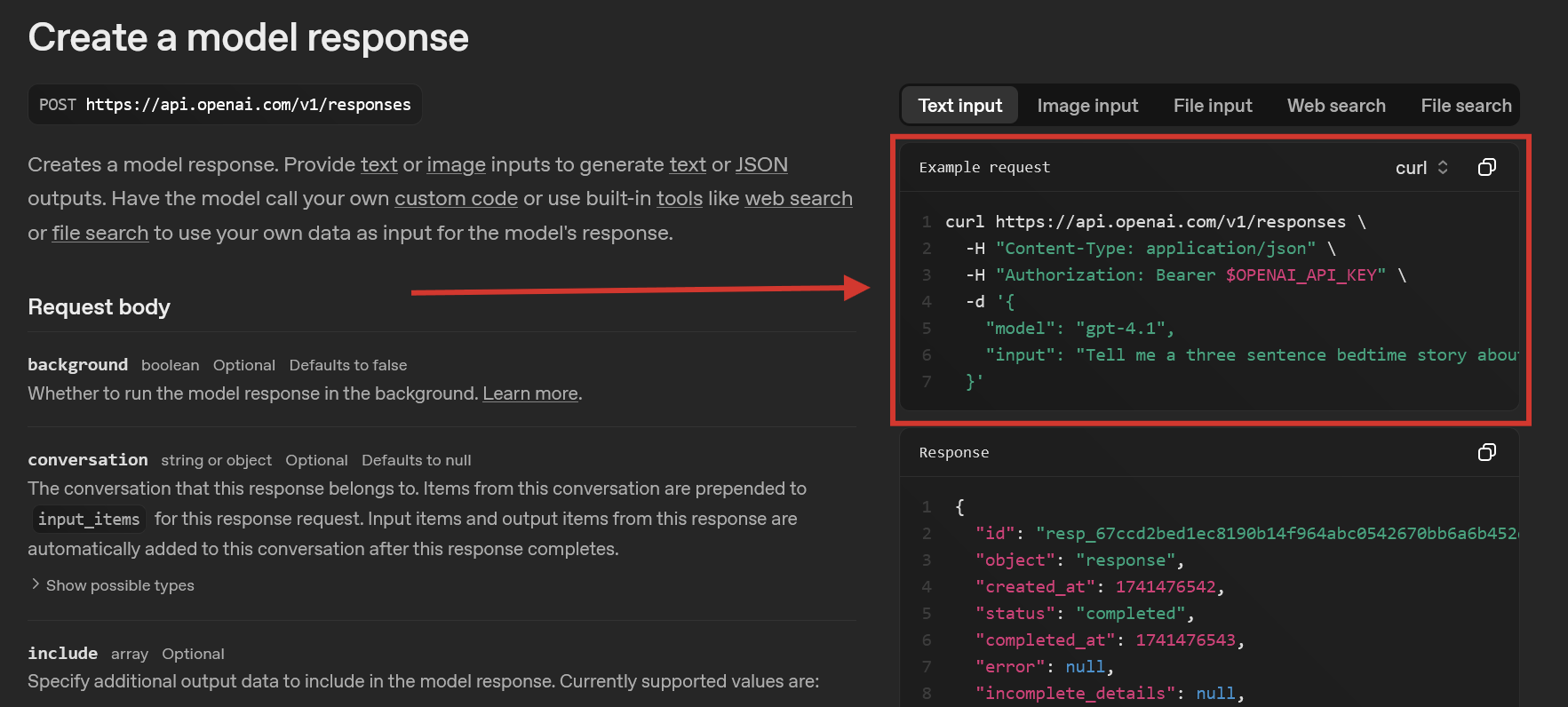

(6.2) Scroll down to the create a model response section. Under the header, notice the HTTP method and copy the URL endpoint (https://api.openai.com/v1/responses).

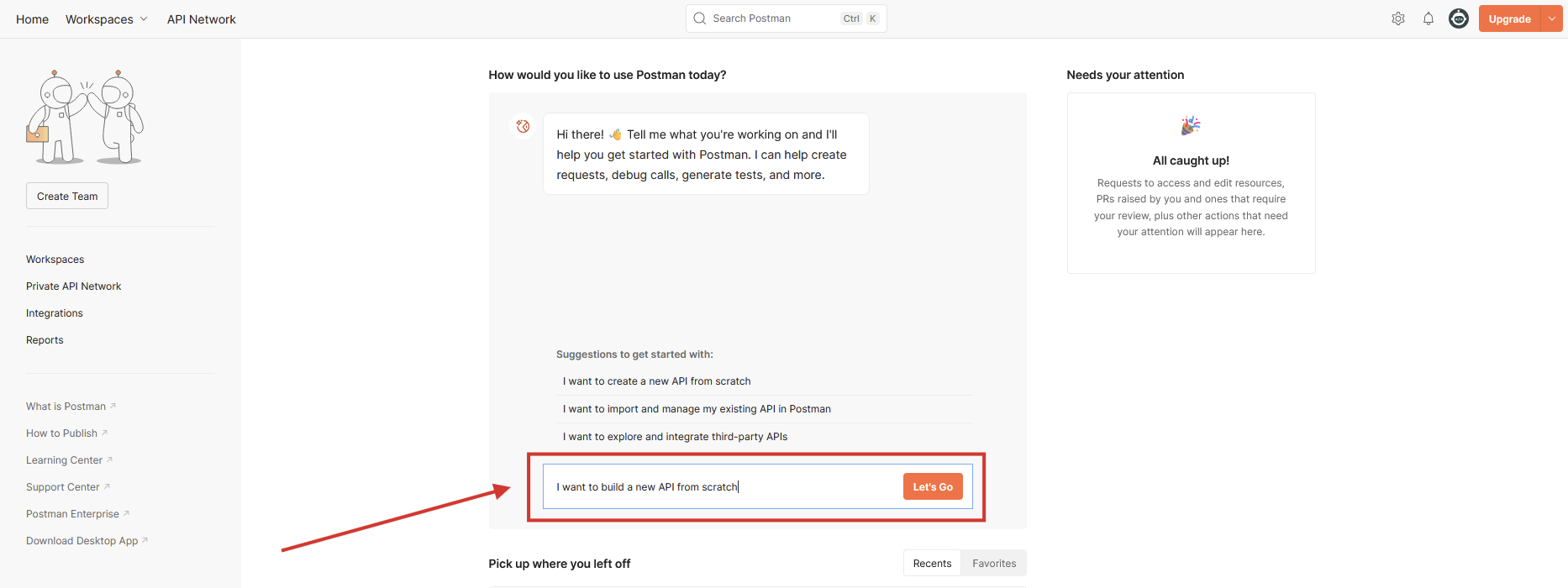

(6.3) Optional: If you’re following the tutorial, create your Postman account and head over to the dashboard. Write in the AI assistant chat that you want to create a new API from scratch. If you prefer to start with building your own tool right away, you can use ChatGPT to help you code or find your internal tool builder / no-code app builder documentation on API connections.

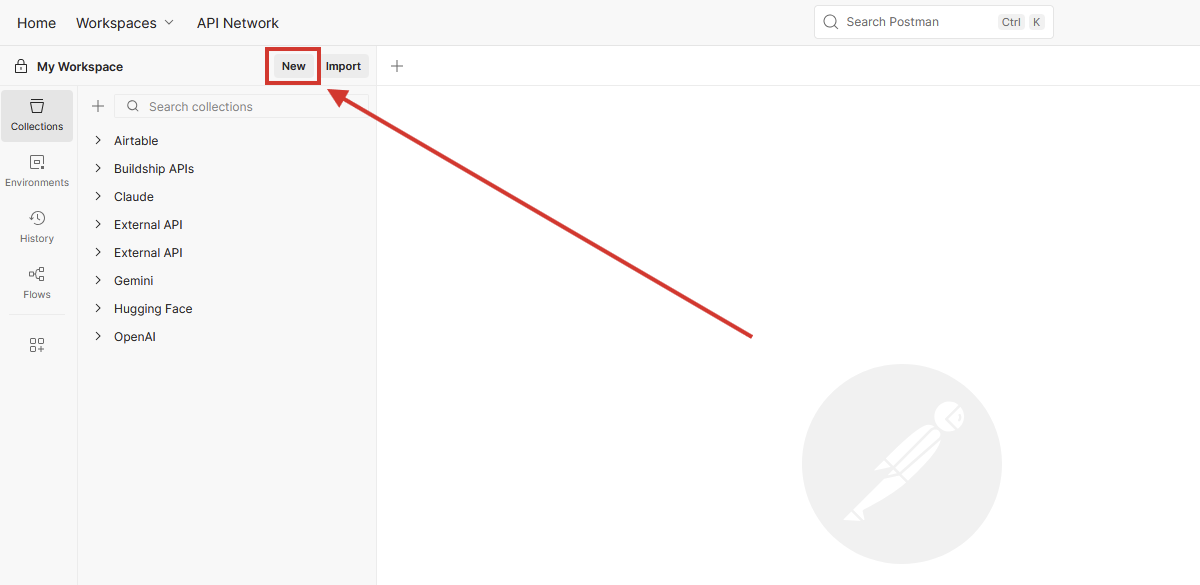

(6.4) In the Postman workspace screen, click New at the top left.

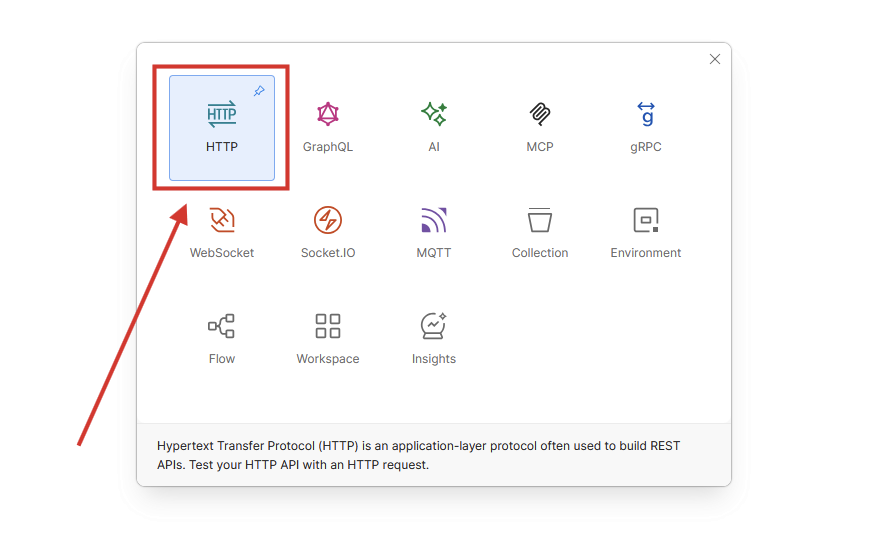

(6.5) Select HTTP.

(6.6) Paste the endpoint URL in the request’s input field.

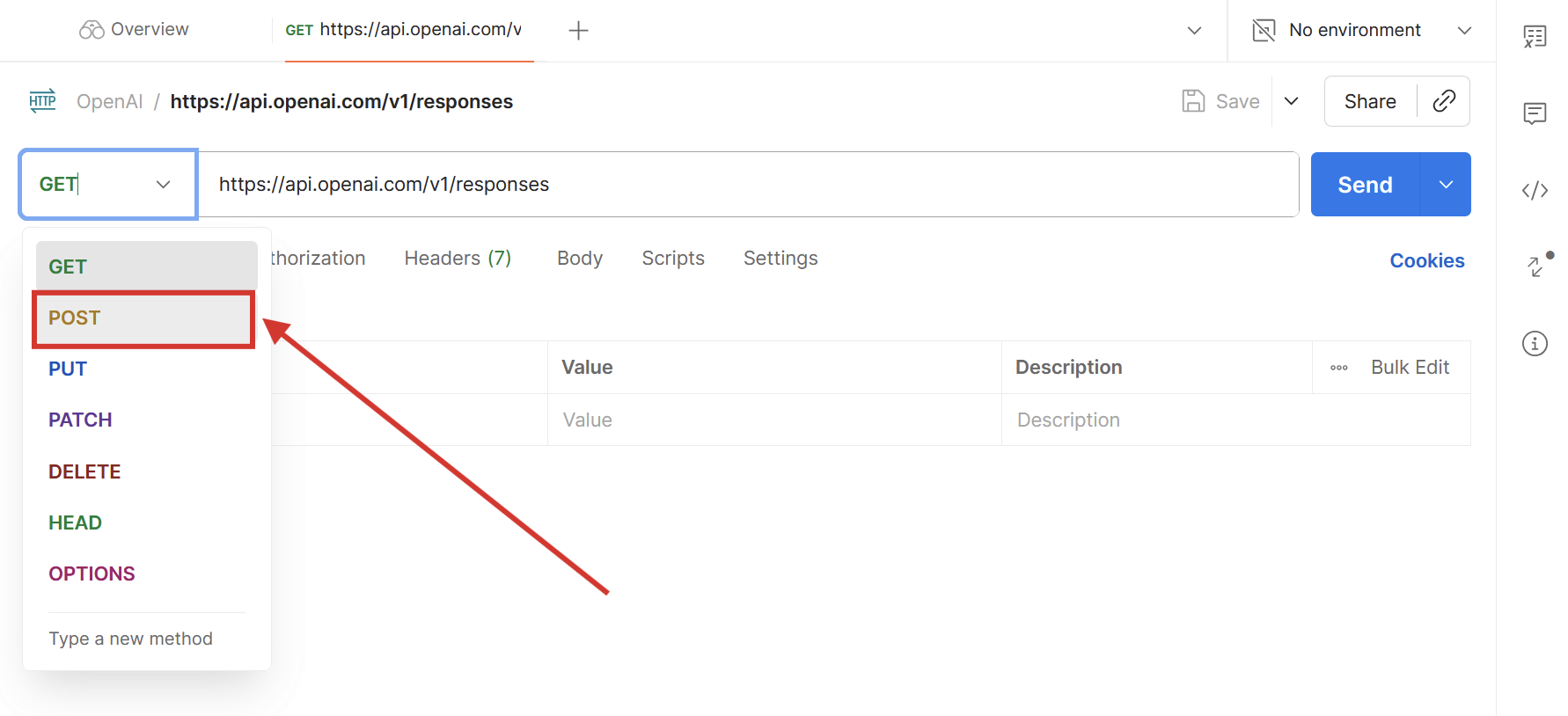

(6.7) Change the HTTP method of the call from GET to POST.

Step 7: Set up authentication and headers

The API won’t accept calls without knowing who’s making them. You need to pass the API key you created earlier to authenticate yourself.

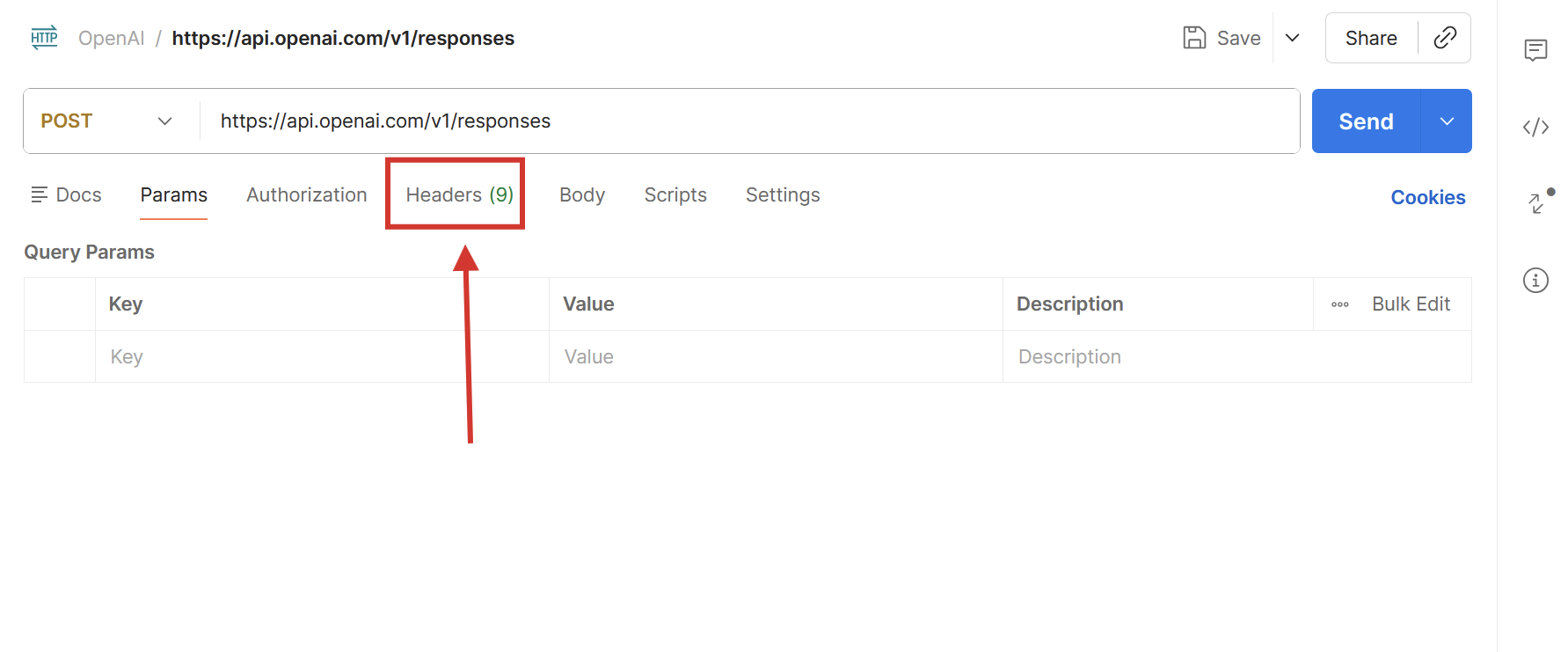

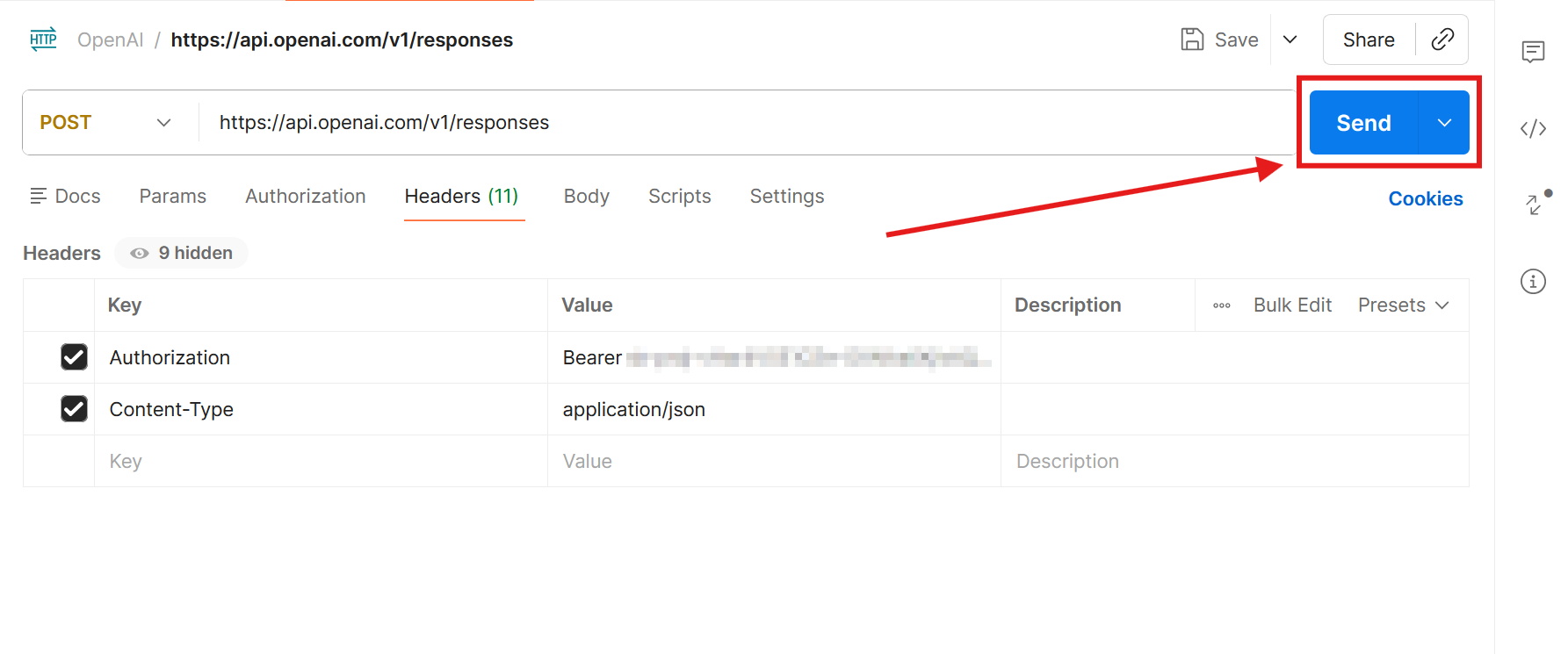

(7.1) The authentication section of the API reference says that the keys should be provided via the header in the HTTP Bearer authentication scheme. While Postman has a dedicated tab to handle this, let’s set this up closer to the structure you’ll use in a real project: click the Headers tab.

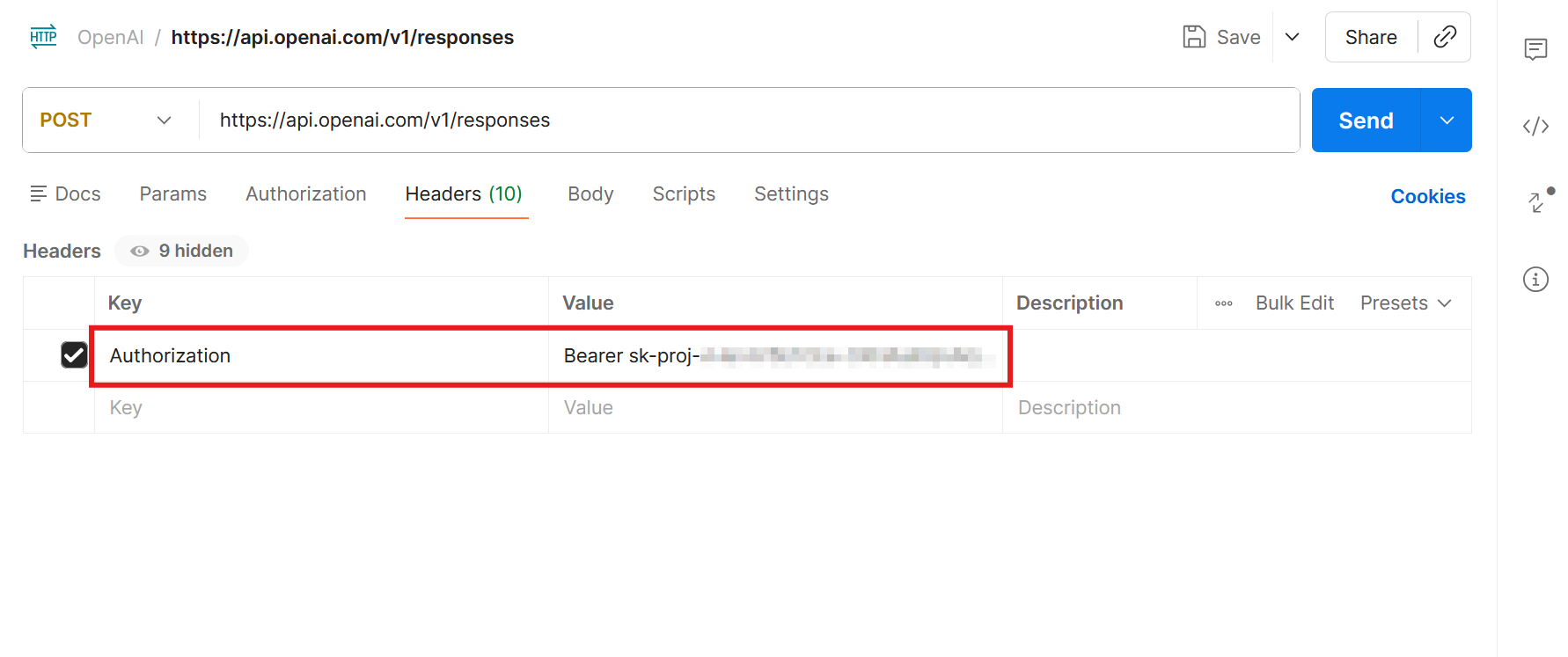

(7.2) Add Authorization to the key column. Then, in the value column, write Bearer, add a space, and paste your API key.

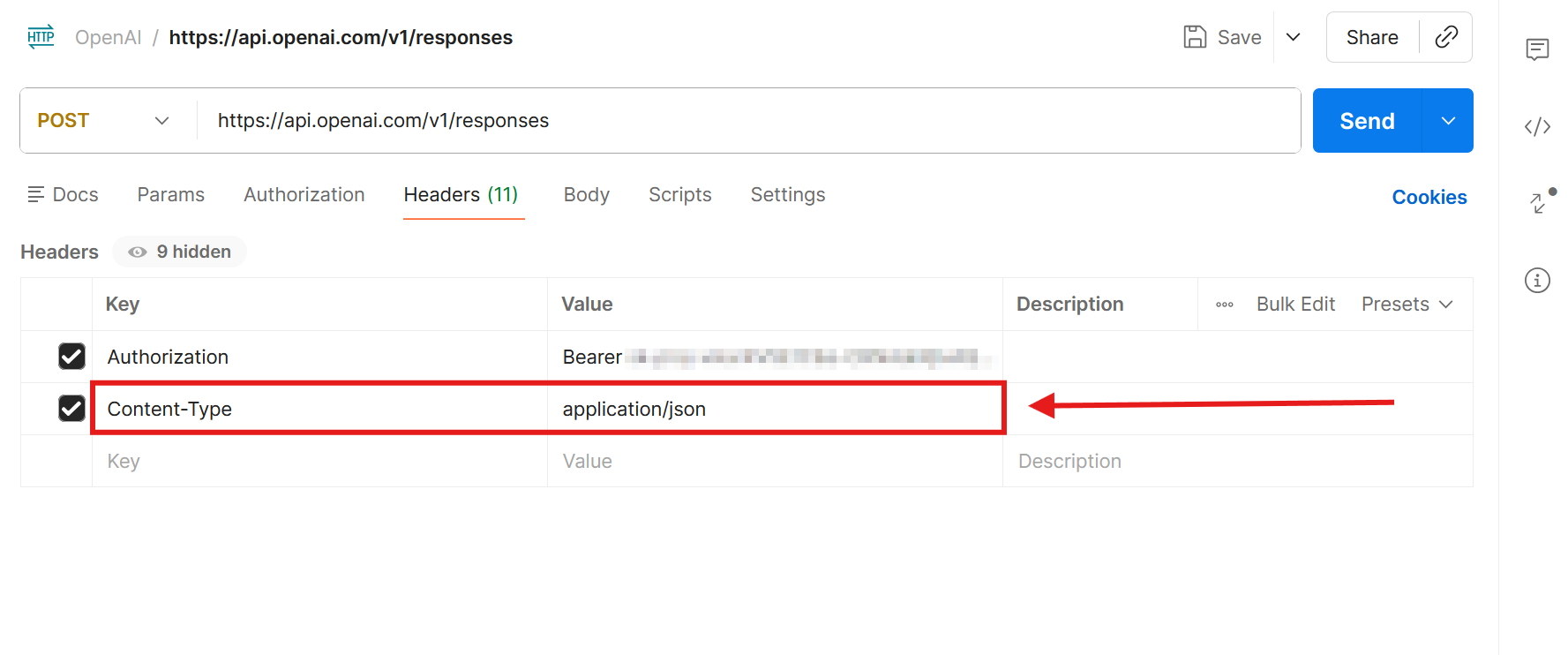

(7.3) Most APIs require a content type key/value pair in the header: OpenAI is one of them, as you can see in the create a model response API reference article’s example request. Add Content-Type to a new row in the key column, and write application/json as the value.
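If you later move from Postman to code, the same two headers can be expressed as a small dictionary. This is a minimal Python sketch; `build_headers` is a hypothetical helper name and the key shown is a placeholder, not a real one.

```python
def build_headers(api_key: str) -> dict[str, str]:
    """Return the two headers from this step for a given API key."""
    cleaned = api_key.strip()  # pasted keys often carry stray whitespace
    if not cleaned or " " in cleaned:
        raise ValueError("The API key is empty or contains spaces; paste it again.")
    return {
        # "Bearer", one space, then the key, exactly as typed into Postman.
        "Authorization": f"Bearer {cleaned}",
        "Content-Type": "application/json",
    }


headers = build_headers("sk-your-key-here")  # placeholder value
print(headers["Content-Type"])
```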

Step 8: Troubleshooting and learning through mistakes

Configuring a new API for the first time is more a hike up a mountain than a stroll on the beach: it takes trial and error to set it up correctly. Struggling is normal, so don’t get discouraged. Let’s make an intentional mistake now to understand how to handle errors in the future.

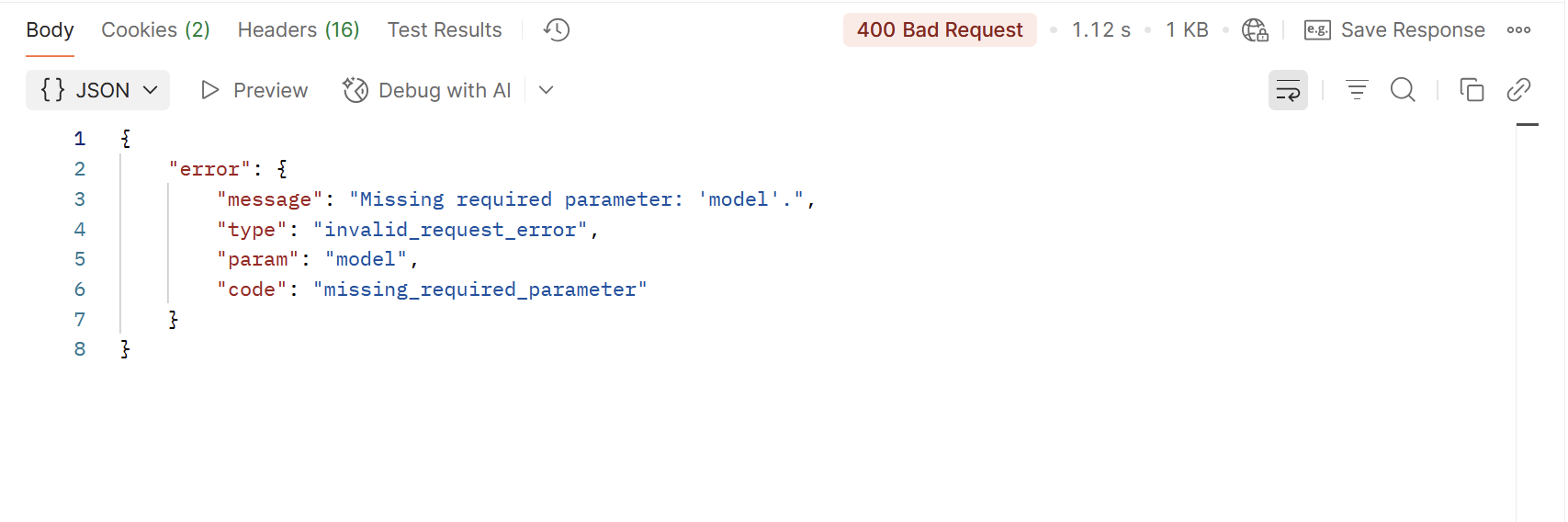

(8.1) Click the Send button, and see what happens.

(8.2) The response appears below the request interface. We have an error: HTTP 400 Bad Request. The output JSON tells us this request is missing the required parameter “model”.

Errors contain hints that help you investigate what’s not working. When you get stuck:

- Check the syntax of the call. Something small, like an extra/missing comma or bracket, can easily break a request.
- Go back to the API docs to understand the requirements for the endpoint.
- Test as you build: call the API whenever you make a meaningful change, so you can easily spot what’s causing issues as you go.
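When you make calls from code instead of Postman, it helps to turn the error JSON into a short, readable message. The sketch below assumes the error body has an `error` object with `message` and `param` fields, which matches the error described in this step but should be checked against the API reference; `describe_error` and the sample body are illustrative.

```python
import json


def describe_error(status_code: int, response_text: str) -> str:
    """Turn an error response into a one-line summary for logs or alerts."""
    try:
        payload = json.loads(response_text)
    except json.JSONDecodeError:
        return f"HTTP {status_code}: the response body was not valid JSON"
    error = payload.get("error") if isinstance(payload, dict) else None
    if not isinstance(error, dict):
        return f"HTTP {status_code}: no error details in the response"
    message = error.get("message", "no message provided")
    param = error.get("param")
    hint = f" (check the '{param}' parameter)" if param else ""
    return f"HTTP {status_code}: {message}{hint}"


# Illustrative error body, modeled on the missing-model error from this step.
sample = json.dumps({
    "error": {
        "message": "Missing required parameter: 'model'.",
        "type": "invalid_request_error",
        "param": "model",
        "code": "missing_required_parameter",
    }
})
print(describe_error(400, sample))
```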

Step 9: Add the request body

The API returned an error because we didn’t add a request body, only the headers.

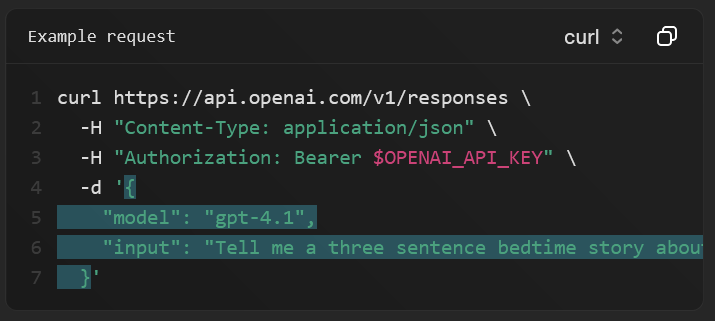

(9.1) Let’s go back to the Responses API reference article. Notice the example request on the right: this shows what the API expects to receive.

By default, the example request section shows a cURL snippet, useful for anyone interacting with the API via command line. That’s not what we’re doing in this tutorial: we’re using Postman. Still, this gives us important information on what each value means and where it should go.

curl https://api.openai.com/v1/responses \\

This cURL command points the system to the API’s endpoint URL. The backslash at the end of the line is a line continuation: it tells the terminal that the command carries on to the next line, which keeps long commands readable. You’ll see it in other lines too.

-H "Content-Type: application/json" \\

-H "Authorization: Bearer $OPENAI_API_KEY" \\

Next, we have the headers, labeled with the -H flag. This request needs to pass the content type and the API key in the Bearer scheme. You already did this in a previous step in Postman.

-d '{

"model": "gpt-4.1",

"input": "Tell me a three sentence bedtime story about a unicorn."

}'

The request data appears after the headers, marked with the -d flag. This is also known as the body or payload. This example has two parameters:

- “model”: “gpt-4.1”, selects the GPT-4.1 model.
- “input”: “Tell me a three sentence bedtime story about a unicorn” is the user prompt that will be sent to the model.

(9.2) Copy the entire JSON body. Do not include the -d flag or the single quotes at the beginning and end.

(9.3) Back in Postman, click the Body tab, select raw, and paste the JSON body in the input field.
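For comparison, here is roughly how the same cURL example translates to Python using only the standard library. Treat it as a sketch: `build_request` and `send` are hypothetical helper names, the key is a placeholder, and nothing is sent until you call `send` yourself.

```python
import json
import urllib.request

ENDPOINT = "https://api.openai.com/v1/responses"


def build_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Translate the cURL example into a request object (nothing is sent yet)."""
    body = {"model": model, "input": prompt}  # same two parameters as the -d payload
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),  # json.dumps handles quotes and commas
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the parsed JSON response.

    urlopen raises urllib.error.HTTPError for error statuses such as 400 or 401.
    """
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_request(
    "sk-your-key-here",  # placeholder; load your real key from a safe place
    "gpt-4.1",
    "Tell me a three sentence bedtime story about a unicorn.",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Building the body from a dictionary and serializing it with `json.dumps` avoids the missing-comma and stray-quote mistakes that are easy to make when typing JSON by hand.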

Step 10: Call the API

The moment of truth.

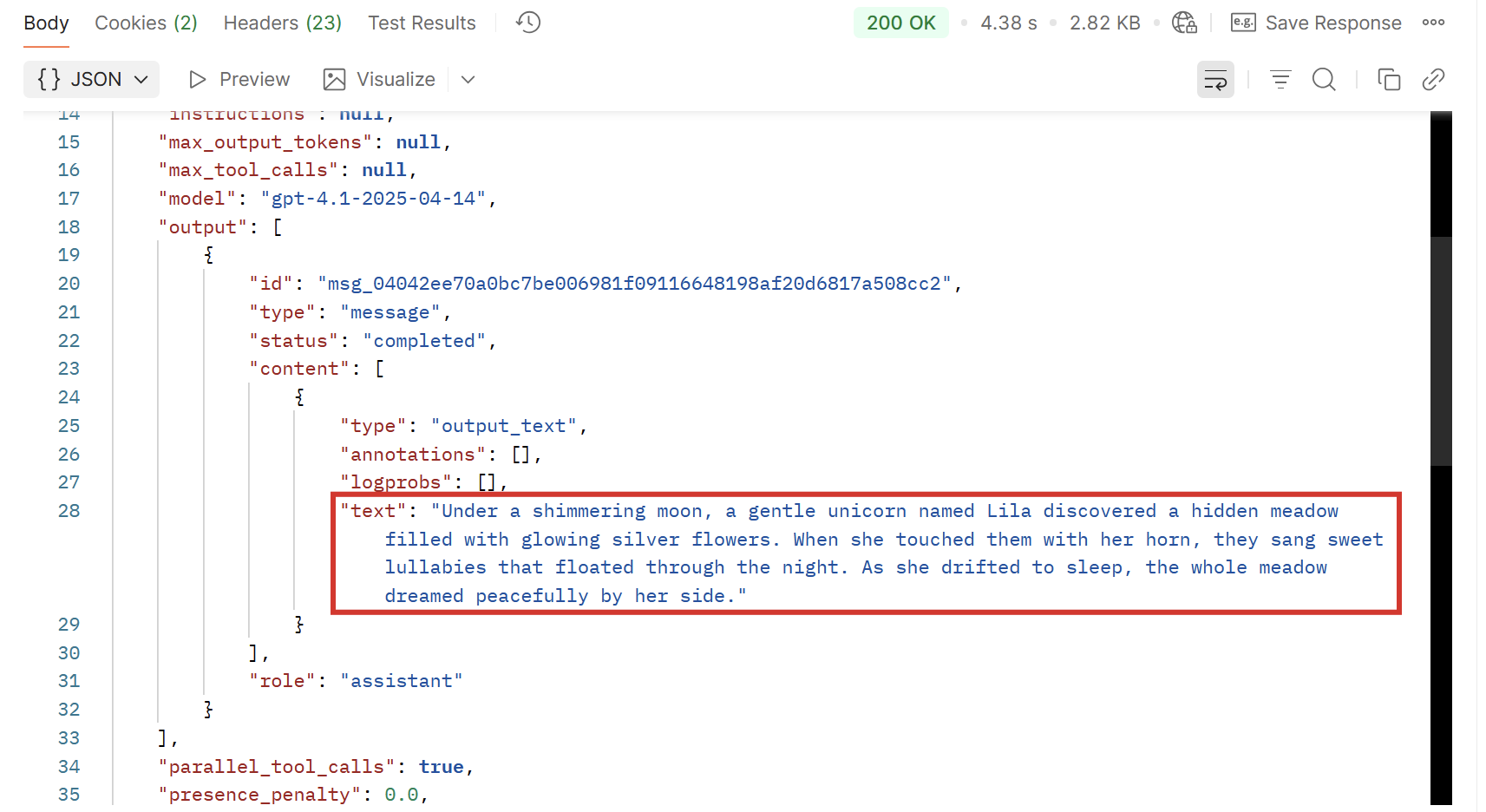

(10.1) Click the Send button and see what happens.

(10.2) If you followed all steps closely so far, you’ll see an HTTP 200 OK status code: your request was received, sent to the selected OpenAI model, and there’s a response waiting for you. Scroll down the output JSON to locate the “text” parameter and read the result.
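In an app, you usually want just the generated text rather than the whole JSON. The sketch below assumes the response contains an `output` list whose message items hold `output_text` blocks, which is how the Responses API reference describes it at the time of writing. The sample response is trimmed and made up for illustration, and `extract_text` is a hypothetical helper name.

```python
def extract_text(response: dict) -> str:
    """Collect every piece of generated text from a parsed response body."""
    parts = []
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue  # skip non-message items such as reasoning or tool calls
        for block in item.get("content", []):
            if block.get("type") == "output_text":
                parts.append(block.get("text", ""))
    return "".join(parts)


# Trimmed, illustrative response body; real responses contain more fields.
sample_response = {
    "status": "completed",
    "output": [
        {
            "type": "message",
            "role": "assistant",
            "content": [
                {"type": "output_text", "text": "Once upon a time, a unicorn fell asleep."}
            ],
        }
    ],
}
print(extract_text(sample_response))
```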

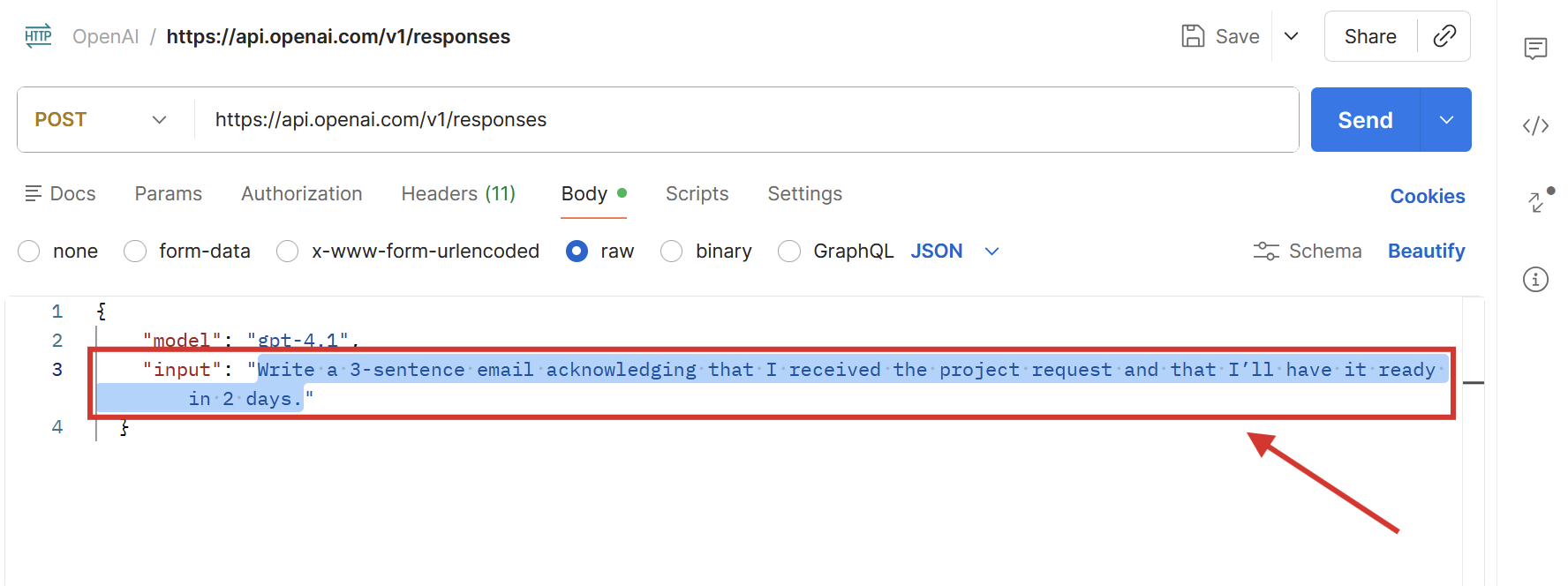

Step 11: Change the prompt and instructions

You called the OpenAI API successfully, but it won’t be useful if we just keep getting bedtime stories as outputs. Let’s explore how to control the prompt and the system instructions that govern how the AI should respond.

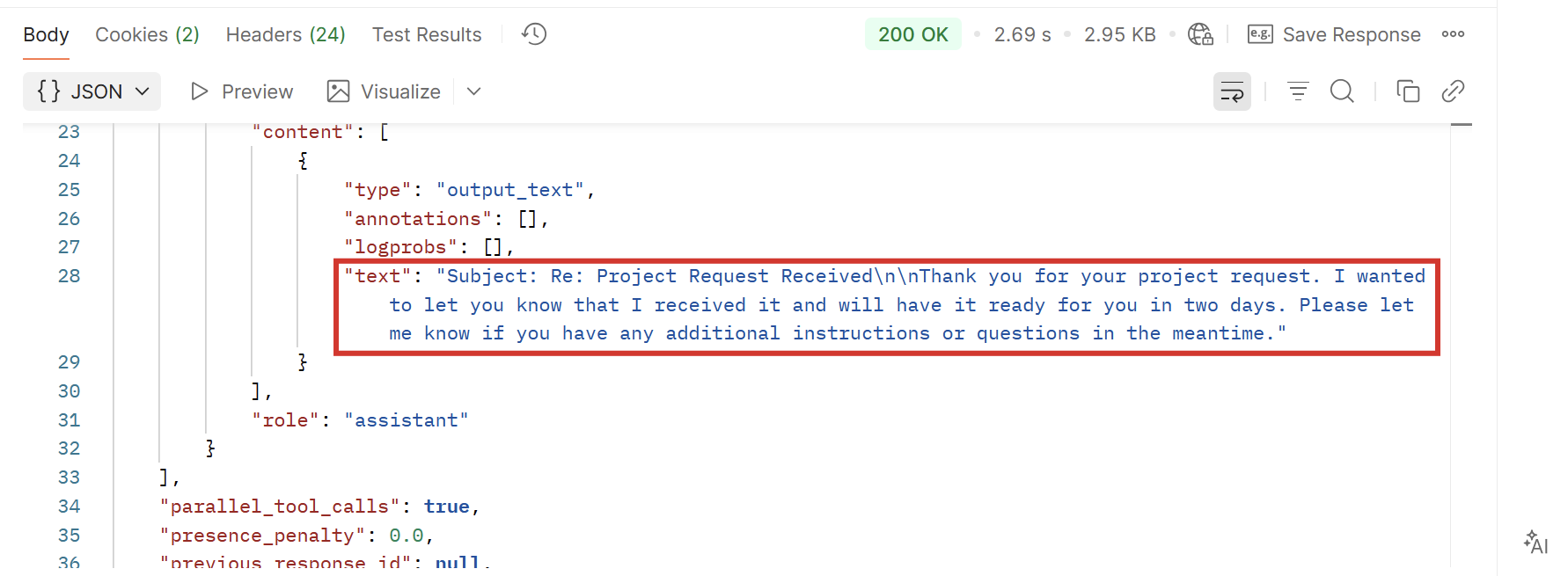

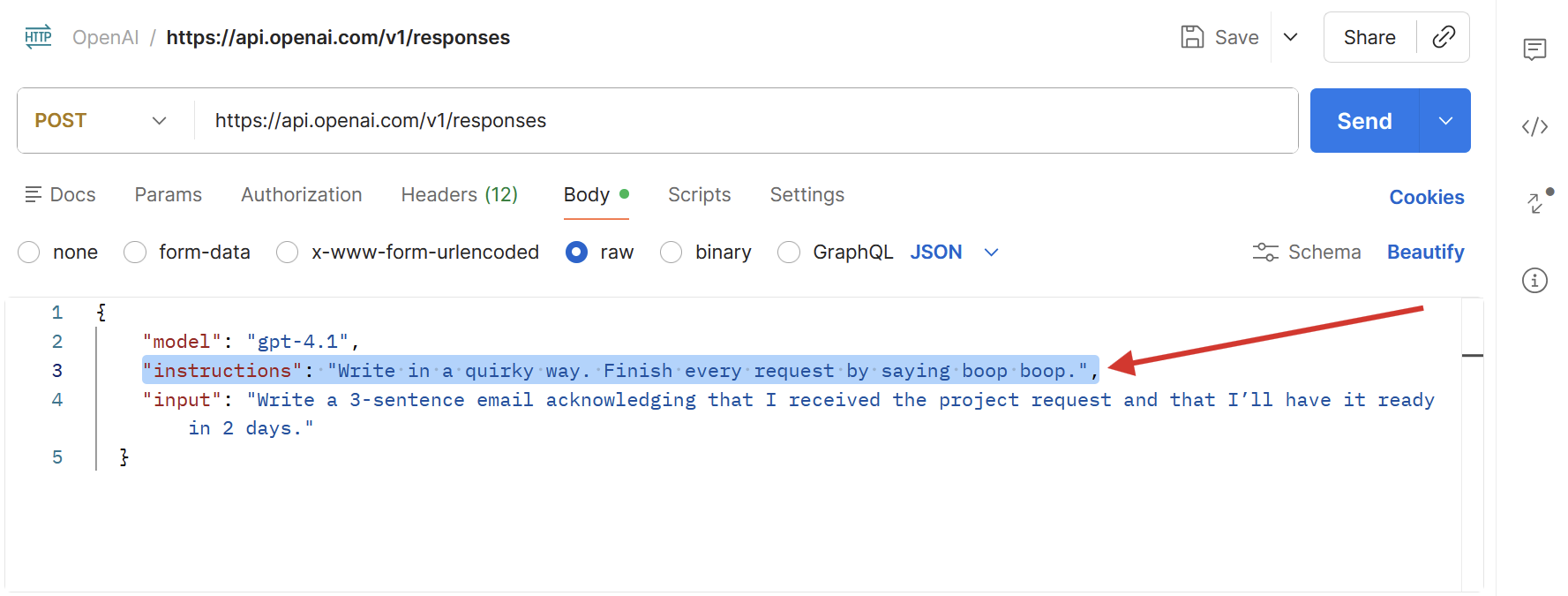

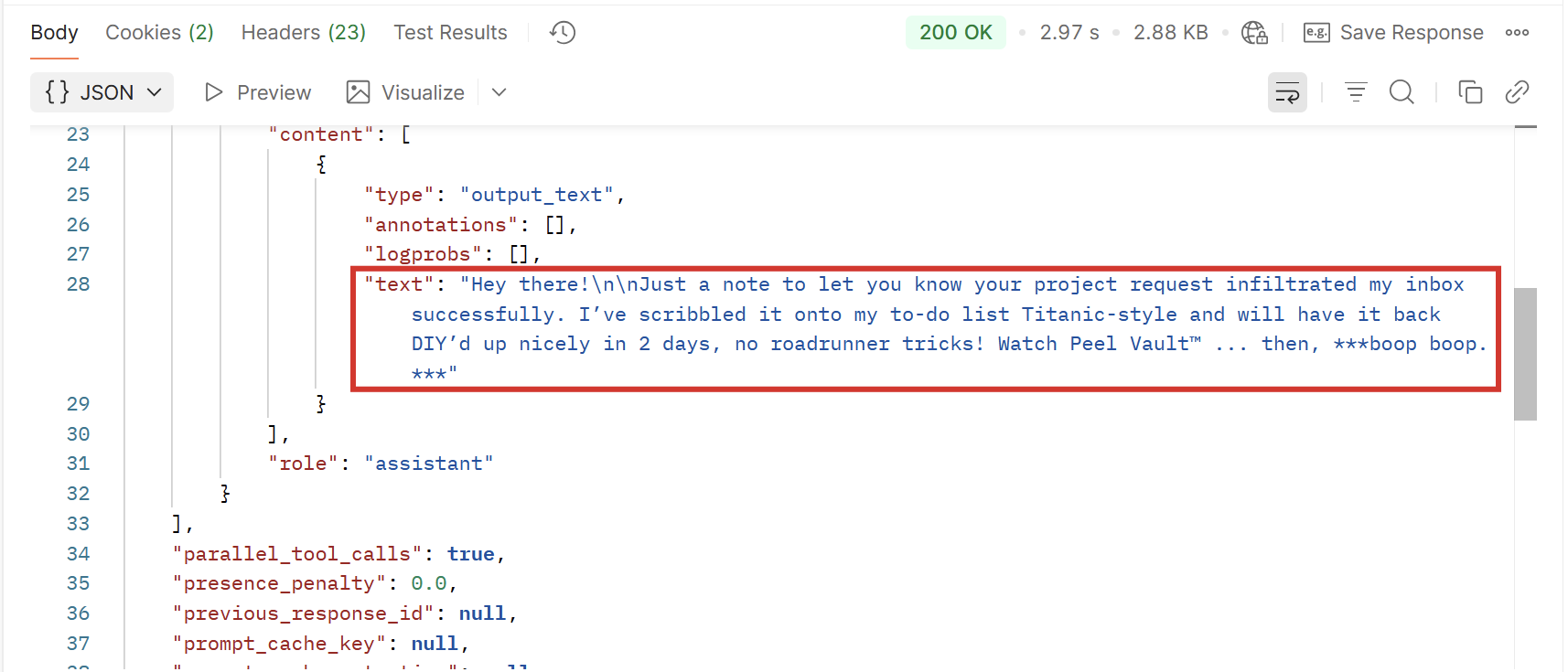

(11.1) In Postman, change the prompt in the input key to something different, such as “Write a 3-sentence email acknowledging that I received the project request and that I’ll have it ready in 2 days.”

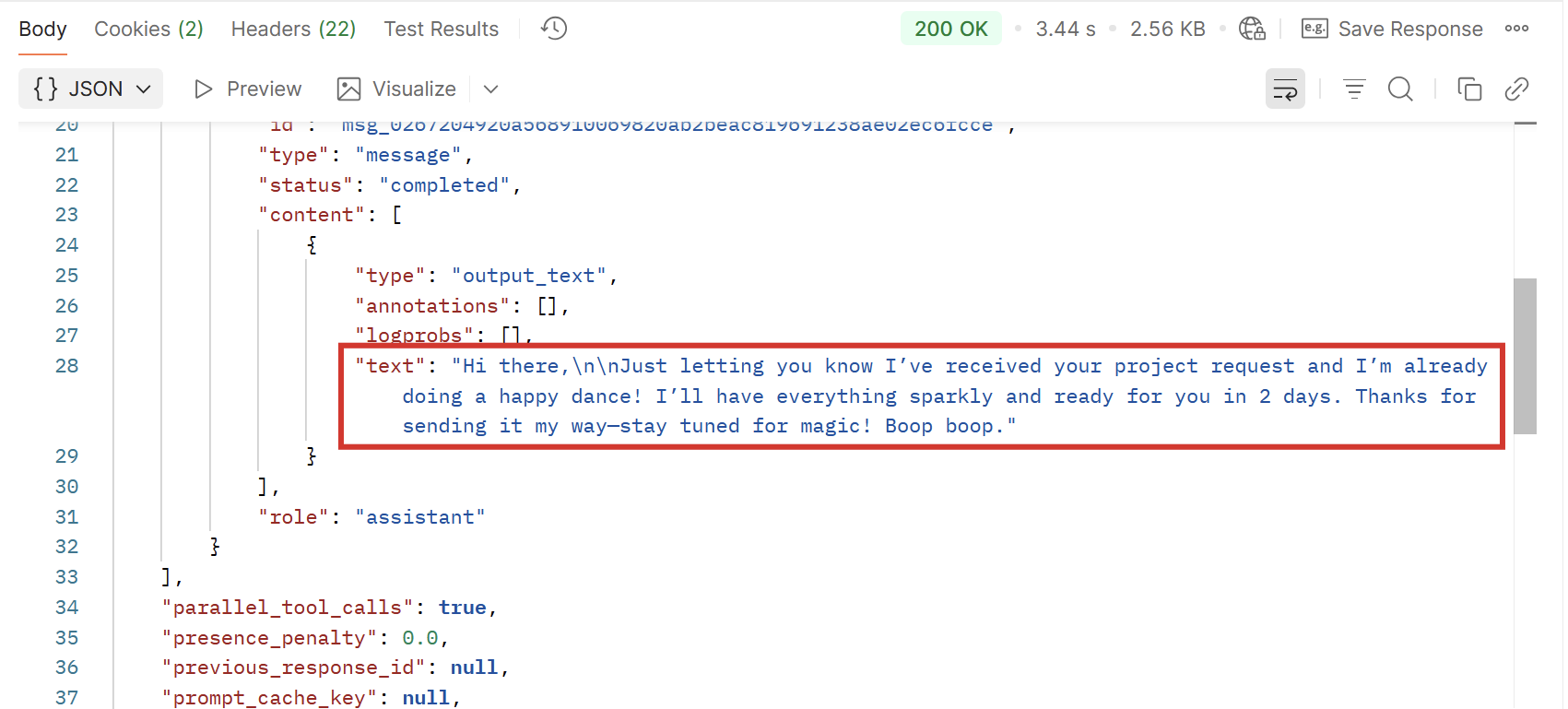

(11.2) Click Send and scroll down the output to see the response. The model follows the instructions correctly.

Note: Changing the content of the input is equivalent to writing a prompt and sending it via ChatGPT.

(11.3) The create a model response API operation supports more parameters beyond model and input. Let’s add system instructions: these will define how the model interprets the prompt. Keep reading the create a model response API reference article until you find the instructions parameter.

What do these mean?

- instructions is the parameter’s name.
- It accepts a string: any combination of text, letters, or characters enclosed within quotes.
- Optional means that the endpoint won’t throw an error if we don’t include this parameter in the call’s body.
- The remaining text explains what the parameter does and how it interacts with other API features.

(11.4) In Postman, after the “model” parameter and its value, add the following:

"instructions": "Write in a quirky way. Finish every request by saying boop boop.",

Note: Postman sometimes doesn’t accept pasted quotation marks. Delete the quotation marks and write them again until the parameter and value change to the interface’s red/blue color combo, as you see below.

(11.5) Click Send again. Notice that, while the prompt sent via the input parameter is the same, the instructions changed the tone of the output.
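If you generate the body in code, the optional `instructions` parameter can be added only when you have something to say. This is a minimal Python sketch; `build_body` is a hypothetical helper name, and the values are the ones used in this step.

```python
import json
from typing import Optional


def build_body(model: str, prompt: str, instructions: Optional[str] = None) -> str:
    """Return the JSON body as a string, adding instructions only when given."""
    body = {"model": model}
    if instructions:
        body["instructions"] = instructions  # optional, so omit it when empty
    body["input"] = prompt
    return json.dumps(body, indent=2)


print(build_body(
    "gpt-4.1",
    "Write a 3-sentence email acknowledging that I received the project "
    "request and that I'll have it ready in 2 days.",
    instructions="Write in a quirky way. Finish every request by saying boop boop.",
))
```

The printed JSON can be pasted into Postman’s Body tab as-is, which sidesteps the quotation mark issue mentioned in the note above.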

Step 12: Customize the response

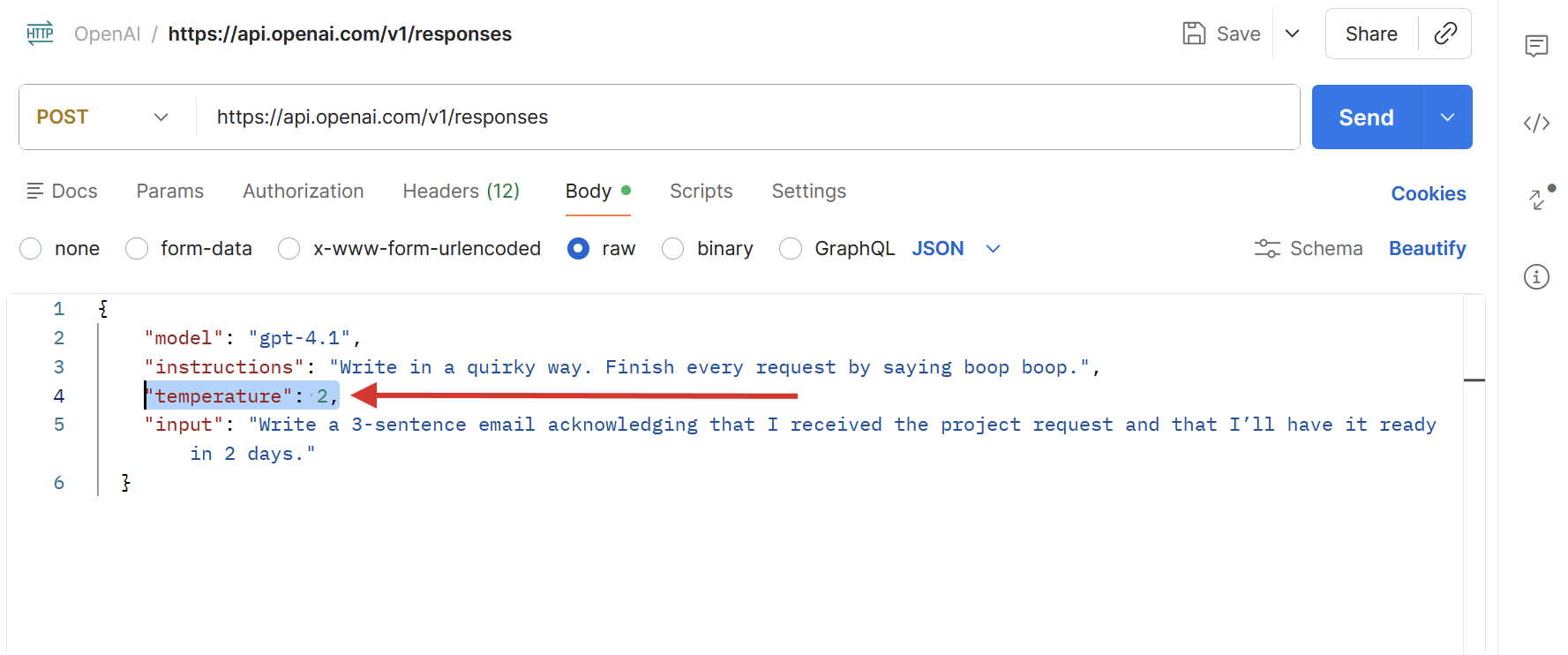

(12.1) You can add more parameters to the body to keep adjusting the model’s behavior. Let’s change the response temperature: learn more about it by finding the parameter in the create a model response API reference article.

(12.2) Add the temperature parameter to the API call, under instructions. Enclose the parameter name inside quotes, but don’t do the same for the number. Finish the line with a comma.

Example: "temperature": 2,

(12.3) Click the Send button a few times and notice that, while the model is still sticking to the instructions, the response varies widely due to the high temperature setting.
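In code, it can be worth validating the temperature before sending the request, so a typo doesn’t cost you an API call. The sketch below assumes the allowed range is 0 to 2, as listed in the API reference at the time of writing; `with_temperature` is a hypothetical helper name.

```python
import json


def with_temperature(body: dict, temperature: float) -> dict:
    """Return a copy of the body with a validated temperature added."""
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    return {**body, "temperature": temperature}


body = with_temperature(
    {"model": "gpt-4.1", "input": "Write a 3-sentence email."},
    2,
)
# The number is serialized without quotes, as the step above requires.
print(json.dumps(body, indent=2))
```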

Step 13: Design new features with the OpenAI API

Postman is great for exploring API capabilities and designing calls you can drop straight into your applications. Keep experimenting: add more body parameters, tweak values, and see what responses come back.

At some point, you’ll finish setting up a call that nails your use case—say, generating a cold email based on a lead’s information. When you hit that sweet spot, save the call in Postman, create a new one, and move on to your next challenge.

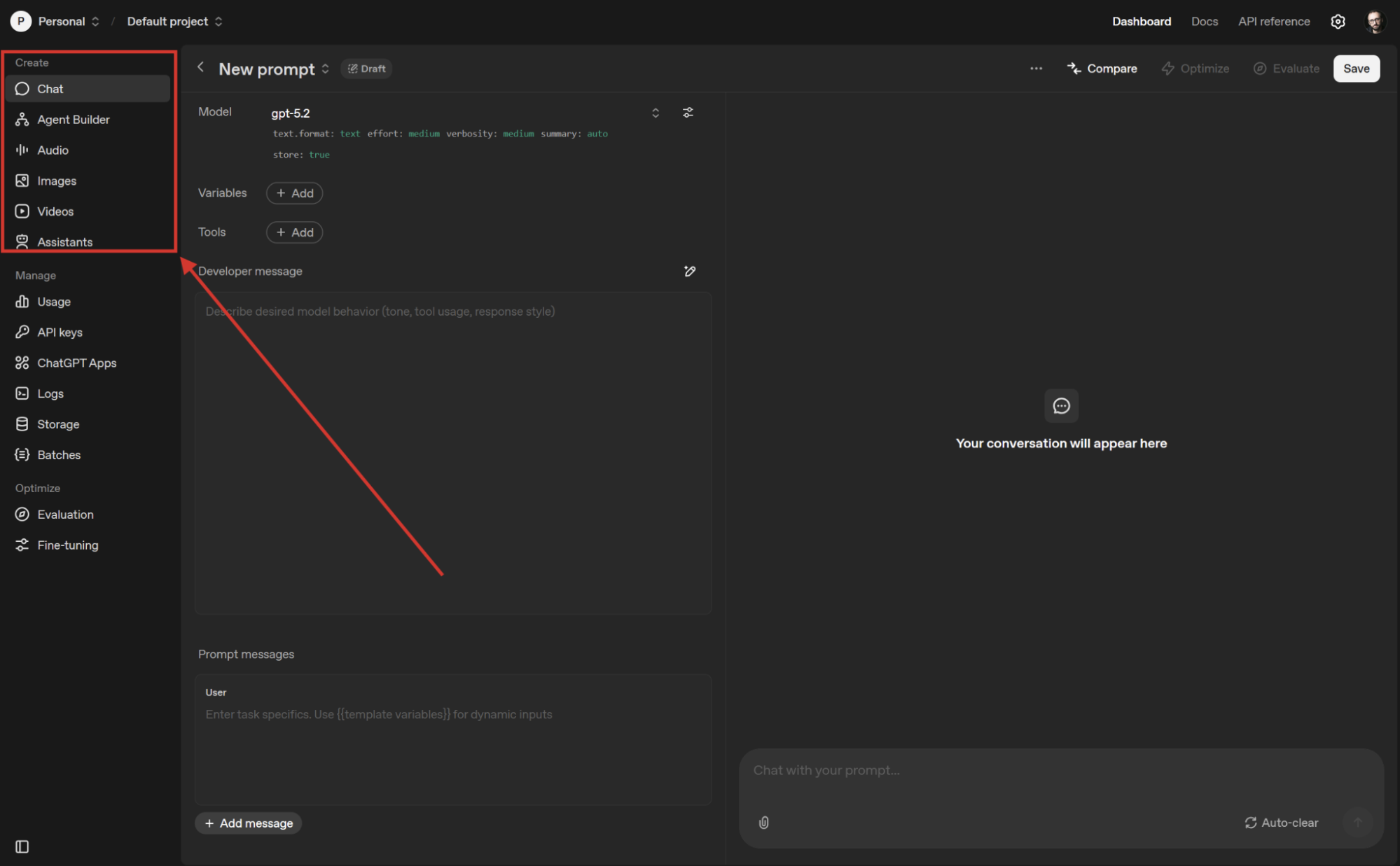

While Postman is a good platform for perfecting your calls before integrating them, OpenAI also has its own toolkit for interacting with the API as you test your ideas. You can find it in the Create section of the main menu of the OpenAI platform’s dashboard.

Step 14: From testing to production: Integrate OpenAI into your apps

Got all your API calls and prompts ready to go? It’s time to integrate them into your app or internal tool: you’ll be able to pass dynamic values through variables, whether that’s a user prompt, system instructions, or any other parameter’s value.

Beyond that, you can also bind the API call to buttons, database operations, visual elements, or triggers like page loads. This is where the integration really comes to life, as you’ll see the content generated by the OpenAI API appear directly in the interfaces you created.
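As a rough illustration of passing dynamic values through variables, the sketch below fills a prompt template with a lead’s details before building the request body. Everything here is hypothetical: the field names, the template wording, and the `build_cold_email_body` helper are assumptions for the example, not part of the OpenAI API.

```python
import json
from string import Template

# Hypothetical prompt template; $name, $role, $company, and $pain_point
# are placeholders filled in from your own data source.
PROMPT = Template(
    "Write a short cold email to $name, $role at $company. "
    "Mention that we help with $pain_point."
)


def build_cold_email_body(lead: dict, model: str = "gpt-4.1") -> str:
    """Fill the template with one lead's details and return the JSON body."""
    prompt = PROMPT.substitute(lead)  # raises KeyError if a field is missing
    return json.dumps({
        "model": model,
        "instructions": "Keep it under 100 words and sound friendly.",
        "input": prompt,
    })


# Made-up lead record for illustration.
lead = {
    "name": "Dana",
    "role": "Head of Operations",
    "company": "Example Co",
    "pain_point": "manual invoice processing",
}
print(build_cold_email_body(lead))
```

In a no-code builder, the same idea usually appears as variables or merge fields inserted into the body of the API connector.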

To complete this process, consult the documentation of the platform that you’re using to make the API calls. Most no-code/low-code app builders offer external API connectors for making calls within the environment. Here are a few examples:

Empower your apps with the OpenAI API

ChatGPT is already amazing for so many use cases, but using the API raises the ceiling even further on what you can achieve. When you integrate these AI models into your apps, you can start automating, scaling, and even launching your own AI-powered products.

If you don’t manage to get your API calls set up on your first try, don’t get discouraged: as a non-techie, I needed dozens of hours of practice to understand what was going on in the very first API calls I made. Keep trying, and that HTTP 200 OK will be yours too.

And if you want to skip the whole process, stick with Zapier and connect ChatGPT to all the apps you use with no code.


This article was originally published in July 2024. The most recent update was in February 2026.